Head-pose and Gazing Patterns

The aim of this project is to understand human head-pose patterns for social signal processing. Head-pose estimation is an interesting problem in computer vision: it gives us important meta-information about communicative gestures, salient regions in a scene, group detection, crowd behavioural dynamics, anomaly detection and more. The grand aim of our work is to exploit this meta-information for Social Signal Processing. Traditionally, the problem has been approached from two different and largely irreconcilable directions. One family of approaches exploits the high-resolution data typical of Human Machine Interaction scenarios; the other works with low-resolution data from the surveillance domain. These approaches have very little in common, even though they are trying to solve similar problems.

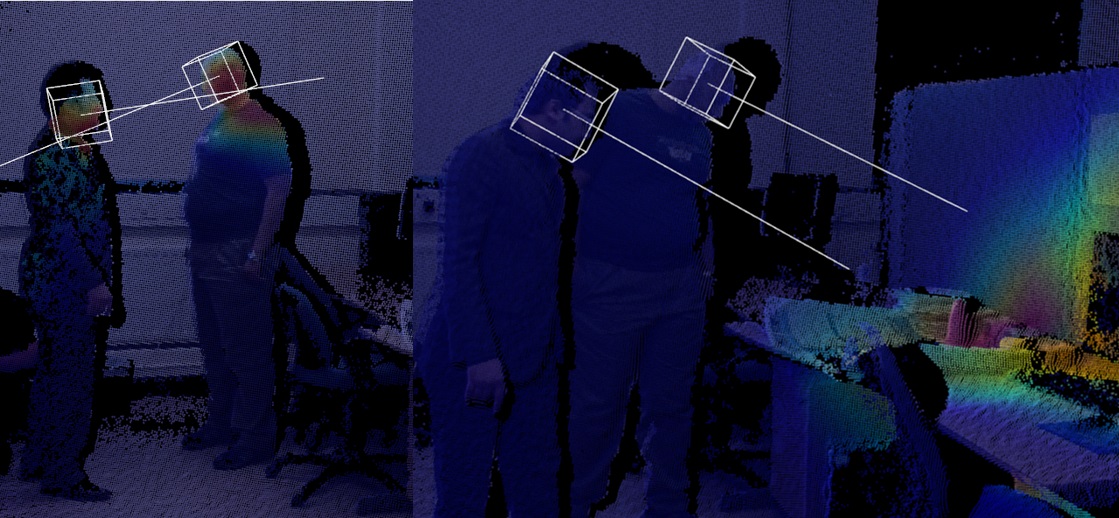

One of the main insights we gained is that it is possible to learn features that are not based on facial landmarks, yet perform similarly to human-computer interaction (HCI) techniques on high-resolution data and degrade gracefully with distance, all the way down to low-resolution surveillance data. This representation, together with Gaussian Process Regression (GPR), lets us regress head pose as a quaternion. We also define a unified approach that models the resulting gaze direction as a 3-dimensional wrapped normal distribution based on the quaternion. Combined with studies on the independence of eye movement, this lets us define a robust probabilistic attention metric that we can then project onto the surroundings. The following image shows the attention metric on the environment.

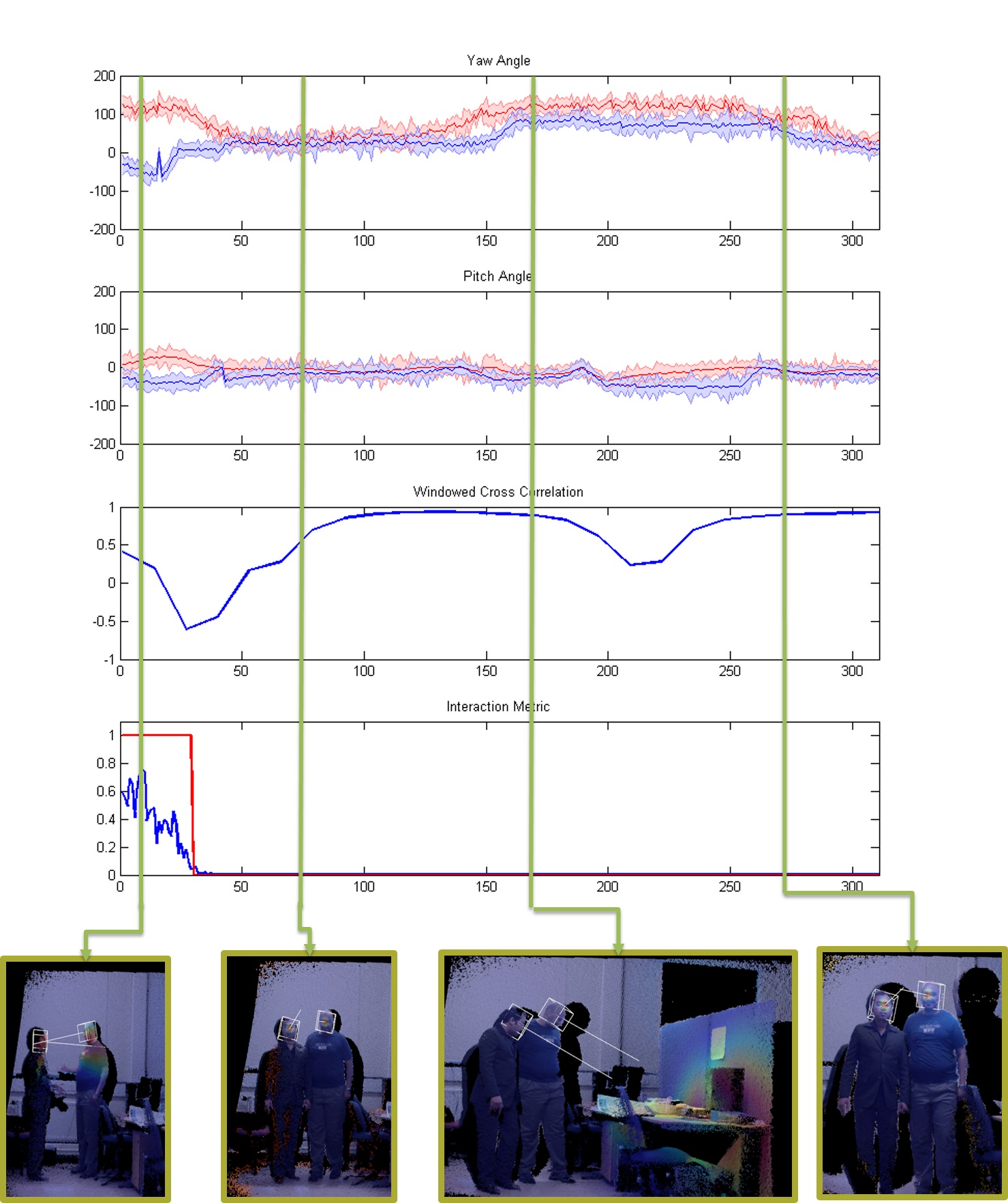
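To make the idea of projecting attention onto the surroundings concrete, here is a minimal Python sketch. It is not the method used in this project: it replaces the 3-dimensional wrapped normal with a one-dimensional wrapped normal over the angle between the gaze ray and a target, and it assumes that the head's forward axis is +x, that the quaternion is a unit quaternion in `(w, x, y, z)` order, and that `sigma` (in radians) is supplied by the caller. The function names `rotate`, `wrapped_normal_pdf` and `attention_score` are hypothetical.

```python
import math

def rotate(q, v):
    """Rotate 3-vector v by the unit quaternion q = (w, x, y, z)."""
    w, x, y, z = q
    # t = 2 * (q_vec x v)
    tx = 2.0 * (y * v[2] - z * v[1])
    ty = 2.0 * (z * v[0] - x * v[2])
    tz = 2.0 * (x * v[1] - y * v[0])
    # v' = v + w * t + q_vec x t
    return (
        v[0] + w * tx + (y * tz - z * ty),
        v[1] + w * ty + (z * tx - x * tz),
        v[2] + w * tz + (x * ty - y * tx),
    )

def wrapped_normal_pdf(theta, mu, sigma, wraps=3):
    """1-D wrapped normal density, truncated to 2 * wraps + 1 terms."""
    total = 0.0
    for k in range(-wraps, wraps + 1):
        d = theta - mu + 2.0 * math.pi * k
        total += math.exp(-d * d / (2.0 * sigma * sigma))
    return total / (sigma * math.sqrt(2.0 * math.pi))

def attention_score(q, head_pos, target, sigma, forward=(1.0, 0.0, 0.0)):
    """Density of the angular offset between the gaze ray and the target.

    Assumption: `forward` is the unit head-frame axis that points out of
    the face; the real system may use a different convention.
    """
    gaze = rotate(q, forward)
    to_target = tuple(t - h for t, h in zip(target, head_pos))
    norm = math.sqrt(sum(c * c for c in to_target))
    if norm == 0.0:
        raise ValueError("target coincides with head position")
    cos_angle = sum(g * c for g, c in zip(gaze, to_target)) / norm
    angle = math.acos(max(-1.0, min(1.0, cos_angle)))
    return wrapped_normal_pdf(angle, 0.0, sigma)

# Head at the origin, turned 90 degrees about the vertical (z) axis.
q = (math.cos(math.pi / 4), 0.0, 0.0, math.sin(math.pi / 4))
on_axis = attention_score(q, (0.0, 0.0, 0.0), (0.0, 2.0, 0.0), sigma=0.3)
off_axis = attention_score(q, (0.0, 0.0, 0.0), (2.0, 0.0, 0.0), sigma=0.3)
```

Here `on_axis` is larger than `off_axis`, because the first target lies along the rotated gaze direction and the second is 90 degrees away from it. The returned value is a density rather than a probability; evaluating it over a grid of scene points would give an attention map in the spirit of the image above.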

Finally, having a full probability distribution lets us perform tracking in a unified quaternion-based unscented Kalman filter framework, with the instantaneous measurement variance estimate provided by the GPR. This has been shown to improve performance significantly when a temporal sequence is available. The usefulness of the whole framework has been validated by extracting "Patterns of Life" signals, such as human-human interactions and social mimicry, from recorded data. The following graph shows a scenario where two people are initially interacting (the interaction signal pops out) and then move together in a synchronised manner, with one person essentially mimicking the actions of the other. This culminates at a point where the attention of both has been drawn to a certain part of the scene and they look at it together (the temporal cross-correlation shoots up).
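As a rough illustration of how a temporal cross-correlation can expose mimicry, the Python sketch below computes a normalised cross-correlation between two scalar signals at a range of lags and picks the lag with the highest correlation. It is only a sketch under stated assumptions: each person's behaviour is reduced to a single per-frame number (for example a yaw angle), and the function names `normalized_cross_correlation` and `best_lag` are hypothetical rather than taken from this project.

```python
import math

def normalized_cross_correlation(a, b, lag):
    """Pearson correlation between a[t] and b[t + lag] on the overlap."""
    if lag >= 0:
        xs, ys = a[:len(a) - lag], b[lag:]
    else:
        xs, ys = a[-lag:], b[:len(b) + lag]
    n = min(len(xs), len(ys))
    if n < 2:
        return 0.0
    xs, ys = xs[:n], ys[:n]
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0.0 or var_y == 0.0:
        return 0.0  # a constant signal carries no correlation information
    return cov / math.sqrt(var_x * var_y)

def best_lag(a, b, max_lag):
    """Lag in [-max_lag, max_lag] at which b best follows a."""
    return max(
        range(-max_lag, max_lag + 1),
        key=lambda lag: normalized_cross_correlation(a, b, lag),
    )

# Synthetic example: person B repeats person A's motion 5 frames later.
leader = [math.sin(0.3 * t) for t in range(60)]
follower = [math.sin(0.3 * (t - 5)) for t in range(60)]
lag = best_lag(leader, follower, max_lag=10)
peak = normalized_cross_correlation(leader, follower, lag)
```

In this synthetic example the best lag is 5 frames and the peak correlation is essentially 1, which is the kind of sharp rise described above. A positive best lag means the second signal trails the first, so its sign gives a hint about who is mimicking whom.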

Publications:

- An adaptive motion model for person tracking with instantaneous head-pose features. R. H. Baxter, M. J. V. Leach, S. S. Mukherjee, N. M. Robertson, IEEE Signal Processing Letters, Vol. 22, Iss. 5, pp. 578-582, 2015. doi: 10.1109/LSP.2014.2364458