Computer vision algorithms on processing platforms for real-time applications

My EngD research investigated methods of implementing computer vision algorithms on processing platforms for real-time applications, including FPGAs and GPUs, using model-based design and automatic code generation where possible.

In a heterogeneous system containing an FPGA, a GPU and a CPU, specific implementations of object detection algorithms can be chosen on the fly in response to environmental changes within a scene. This allows dynamic optimisation to meet changing power, speed or accuracy goals, and makes the system more flexible and better able to respond to environmental changes than one based on a processor and a single accelerator. We explore the performance of a single algorithm, Histograms of Oriented Gradients (HOG), for pedestrian detection in a heterogeneous system below.

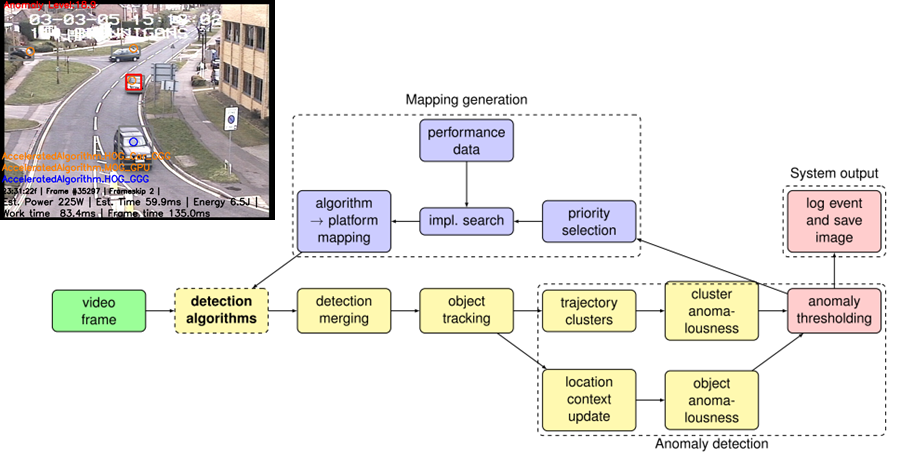

We then apply this knowledge to a scenario involving the detection of anomalous behaviour of people and vehicles. Here, the computationally expensive detectors are accelerated on FPGA and GPU, and we dynamically select a combination of these platforms depending on the level of anomaly seen in the scene at any point.
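To make the selection step concrete, here is a minimal Python sketch of one way an anomaly level could drive the choice of platform. It is an illustration under stated assumptions, not the method used in the research: the names `Implementation`, `priorities_from_anomaly` and `select` are hypothetical, the power, time and miss-rate figures are placeholders rather than measurements, and the linear mapping from anomaly level to priority weights is assumed purely for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Implementation:
    name: str
    power_w: float    # power draw in watts (placeholder value)
    time_ms: float    # processing time per frame in ms (placeholder value)
    miss_rate: float  # fraction of missed detections, 0..1 (placeholder value)


# Hypothetical figures for illustration only; NOT measurements from the research.
CANDIDATES = [
    Implementation("cpu", power_w=150.0, time_ms=900.0, miss_rate=0.20),
    Implementation("fpga", power_w=160.0, time_ms=120.0, miss_rate=0.25),
    Implementation("gpu", power_w=220.0, time_ms=60.0, miss_rate=0.20),
]


def priorities_from_anomaly(anomaly):
    """Assumed mapping: a quiet scene favours low power, an anomalous
    scene favours speed and accuracy. The input is clamped to [0, 1]."""
    a = min(max(anomaly, 0.0), 1.0)
    return {"power": 1.0 - a, "time": a / 2.0, "miss": a / 2.0}


def select(candidates, weights):
    """Return the candidate with the lowest weighted, normalised cost."""
    # Normalise each metric by its maximum so the weights are comparable.
    max_p = max(c.power_w for c in candidates)
    max_t = max(c.time_ms for c in candidates)
    max_m = max(c.miss_rate for c in candidates)

    def cost(c):
        return (weights["power"] * c.power_w / max_p
                + weights["time"] * c.time_ms / max_t
                + weights["miss"] * c.miss_rate / max_m)

    return min(candidates, key=cost)


if __name__ == "__main__":
    for level in (0.0, 0.5, 1.0):
        chosen = select(CANDIDATES, priorities_from_anomaly(level))
        print(f"anomaly={level:.1f} -> {chosen.name}")
```

With these placeholder figures, an anomaly level of 0 selects the lowest-power entry and a level of 1 selects the GPU entry. A manual slider could supply the weights directly in place of `priorities_from_anomaly`.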

System block diagram (heterogeneous object detectors are used):

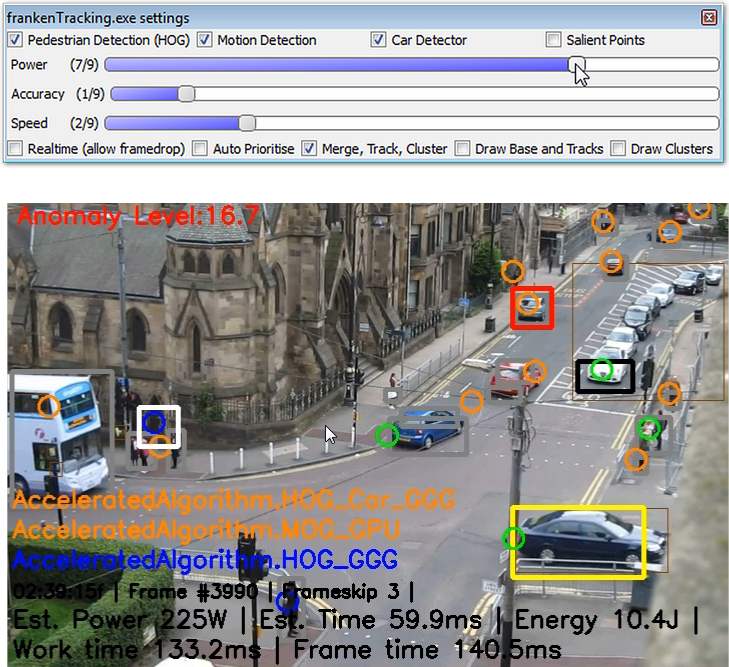

Processing priorities are selected algorithmically (as in the image) or manually (by slider):

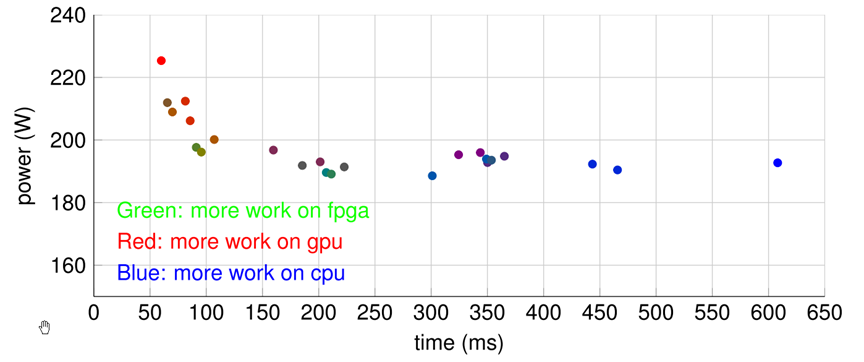

Trade-off of power against time for different platforms. The best solutions, those for which no other option is both faster and lower in power, form a Pareto curve.
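As a rough illustration of how such a Pareto curve can be extracted from a set of (time, power) measurements, the sketch below keeps only the non-dominated points. The function name `pareto_front` and the sample figures are hypothetical placeholders, not results from the research; lower is assumed to be better on both axes.

```python
def pareto_front(points):
    """Return the (name, time_ms, power_w) tuples that are not dominated.

    A point is dominated if another point is at least as good on both
    axes; lower time and lower power are both better.
    """
    front = []
    best_power = float("inf")
    # Sweep from fastest to slowest: a point survives only if it draws
    # strictly less power than every point that is at least as fast.
    for name, time_ms, power_w in sorted(points, key=lambda p: (p[1], p[2])):
        if power_w < best_power:
            front.append((name, time_ms, power_w))
            best_power = power_w
    return front


# Hypothetical placeholder measurements: (platform, time in ms, power in W).
SAMPLES = [
    ("cpu", 900.0, 150.0),
    ("fpga", 120.0, 160.0),
    ("gpu", 60.0, 220.0),
    ("gpu+fpga", 100.0, 240.0),
]

if __name__ == "__main__":
    for name, time_ms, power_w in pareto_front(SAMPLES):
        print(f"{name}: {time_ms} ms, {power_w} W")
```

In this made-up data set the `gpu+fpga` entry is dropped because the `gpu` entry is both faster and lower in power; the remaining three points form the curve.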

Publications:

- Introspective Classification for Pedestrian Detection, C. G. Blair, J. Thompson & N. M. Robertson, IEEE Conference on Sensor Signal Processing for Defence (SSPD 2014) [PDF]

- Characterising a Heterogeneous System for Person Detection in Video using Histograms of Oriented Gradients: Power vs. Speed vs. Accuracy, C. Blair, N. M. Robertson, D. Hume, IEEE Journal on Emerging and Selected Topics in Circuits and Systems, 2013 [PDF]