Enhancing object detection methods using scene context

This Engineering Doctorate (EngD) is an industry-relevant research project focusing on object detection and tracking, specifically in a surveillance context. Presently, object detection systems perform poorly in complex and dynamic scenes where changes in illumination or occlusions are frequent. When such systems are employed in defence or surveillance tasks, under-performing detectors can have far-reaching negative effects. The underlying motivation for the project is to improve overall detector performance in these scenarios, for both thermal and visible imagery, via context-based processing.

The incorporation of context into detection systems will provide additional, scene-specific information that results in a higher-level understanding of the scene. This added depth of knowledge will come from a segmentation stage, which provides additional information about the foreground and background of a scene and will ultimately be used to enhance future detections. The principal technology produced by this EngD will be a robust detection system, capable of advanced analysis of challenging image data and of region-specific processing.

Initial progress has focused on developing the segmentation stage (mentioned above) that incorporates

detection and tracking information for foreground objects present in the scene. The method utilises a

current superpixel algorithm and forms larger regions based on a well-known similarity metric, the

Bhattacharyya distance, with direct influence coming from foreground objects. A contextual framework

encoding temporal and spatial information from previous segmentations can then be used to 'smooth' the

final result. Although this algorithmic method is in early development it has shown some encouraging

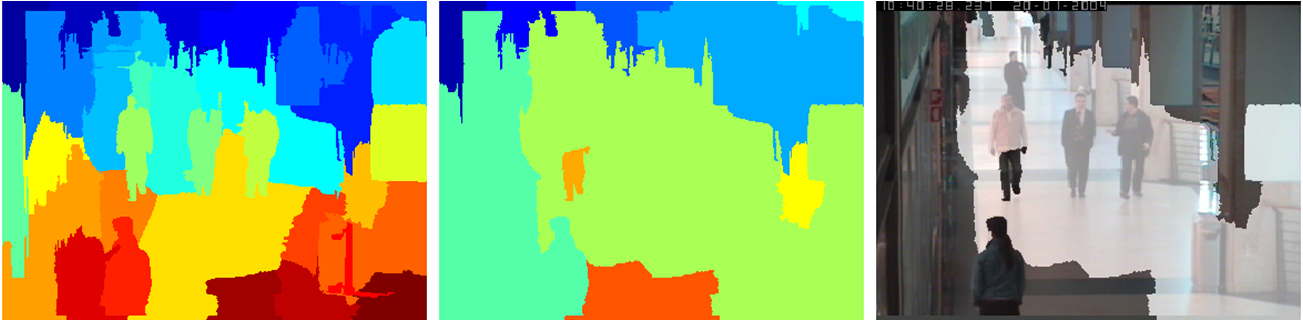

results, as shown on a crowded shopping mall scene from the public 'Caviar' video dataset,:

The first image (left) is a segmentation of a frame from the CAVIAR sequence, produced without any foreground knowledge or contextual smoothing. Without these, it is hard for the algorithm to merge the people with the background concourse to form a contiguous region. The middle image is a segmentation of the same frame, this time using the influence of tracking and context. It contains fewer superpixels and forms a good approximation of the distinct regions present in the image. The important region is the central concourse in the middle of the segmentation, as this is where the majority of the track information was found. Lastly, the right-hand image shows an overlay of this central region on the original input image. It is effectively a mask showing where we expect to see people in the image, which is only possible using foreground detections and context.

The EngD in Optics and Photonics Technologies is a collaboration between Heriot-Watt (HW), Glasgow, St Andrews and Strathclyde Universities, as well as various industrial partners. This project receives academic support from Heriot-Watt University and is sponsored by Thales. Funding is provided by both the Engineering and Physical Sciences Research Council (EPSRC) and Thales. Supervision is provided by Dr Neil M. Robertson (HW) and Dr Barry Connor (Thales).
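
As an illustration of the region-forming step described earlier, the following minimal Python sketch computes the Bhattacharyya distance between the histograms of two superpixels and uses it to decide whether they are similar enough to merge. It is not the project's implementation: the function names, the use of simple intensity histograms, the `-ln(BC)` form of the distance and the threshold value are all assumptions made for illustration, and the influence of foreground objects and contextual smoothing is not modelled.

```python
import math


def normalise(hist):
    """Scale a histogram of non-negative counts so that it sums to 1."""
    total = sum(hist)
    if total <= 0:
        raise ValueError("histogram must contain at least one count")
    return [h / total for h in hist]


def bhattacharyya_distance(hist_p, hist_q):
    """Bhattacharyya distance, -ln(BC), between two histograms.

    BC is the Bhattacharyya coefficient, sum_i sqrt(p_i * q_i), computed on
    the normalised histograms. Identical distributions give a distance of 0;
    distributions with no overlapping bins give infinity.
    """
    if len(hist_p) != len(hist_q):
        raise ValueError("histograms must have the same number of bins")
    p = normalise(hist_p)
    q = normalise(hist_q)
    bc = sum(math.sqrt(a * b) for a, b in zip(p, q))
    bc = min(bc, 1.0)  # guard against floating-point overshoot
    if bc == 0.0:
        return math.inf
    return -math.log(bc)


def should_merge(hist_p, hist_q, threshold=0.1):
    """Hypothetical merge rule: merge two regions whose distance is small.

    The threshold is an illustrative value, not one taken from the project.
    """
    return bhattacharyya_distance(hist_p, hist_q) < threshold


if __name__ == "__main__":
    floor_a = [10, 10, 0]   # assumed 3-bin intensity histograms
    floor_b = [9, 11, 0]
    person = [0, 1, 19]
    print(should_merge(floor_a, floor_b))  # similar appearance
    print(should_merge(floor_a, person))   # dissimilar appearance
```

In a scheme of this kind, neighbouring superpixels would be compared pairwise and merged while their distance stays under the threshold, so that regions of similar appearance, such as a concourse floor, grow into a single segment.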